Oracle INSERT Statement: Behind the Scenes

Learn how Oracle executes INSERT statements internally. Complete guide covering parsing, undo segments, redo logs, buffer cache, locks, constraints, and commit process with practical examples for DBA interviews.

When you execute a simple INSERT INTO employees VALUES (101, 'John', 'Doe', 50000); statement, Oracle performs a complex series of operations involving memory structures, redo logs, undo segments, and data files. Understanding this internal process is crucial for database administrators and developers, especially during performance tuning and troubleshooting.

In this comprehensive guide, we’ll explore the complete journey of an INSERT statement from the moment you execute it until the data is permanently stored in the database.

The Complete INSERT Statement Execution Flow

┌─────────────────────────────────────────────────────────────────┐

│ USER EXECUTES INSERT STATEMENT │

│ INSERT INTO employees VALUES (101,'John','Doe',50000); │

└──────────────────────────┬──────────────────────────────────────┘

│

▼

┌─────────────────────────────────────────────────────────────────┐

│ STEP 1: SYNTAX CHECK │

│ • Validate SQL syntax │

│ • Check INSERT keyword usage │

│ • Verify parentheses and commas │

└──────────────────────────┬──────────────────────────────────────┘

│

[Valid Syntax?]

│

Yes ───┼─── No → Error: ORA-00936

│ (Missing expression)

▼

┌─────────────────────────────────────────────────────────────────┐

│ STEP 2: SEMANTIC CHECK │

│ • Does table exist? │

│ • Do columns exist? │

│ • Does user have INSERT privilege? │

│ • Check NOT NULL constraints │

└──────────────────────────┬──────────────────────────────────────┘

│

[Objects Valid?]

│

Yes ───┼─── No → Error: ORA-00942/ORA-01400

│

▼

┌─────────────────────────────────────────────────────────────────┐

│ STEP 3: SHARED POOL CHECK │

│ • Generate SQL hash value │

│ • Search Library Cache for execution plan │

│ • Hard parse vs Soft parse decision │

└──────────────────────────┬──────────────────────────────────────┘

│

[Plan Found?]

│

┌──────────────┴──────────────┐

│ │

Yes No

(Soft Parse) (Hard Parse)

│ │

│ ▼

│ ┌─────────────────────────────────────┐

│ │ Create Execution Plan │

│ │ • Parse SQL │

│ │ • Validate constraints │

│ │ • Check triggers │

│ │ • Store plan in cache │

│ └──────────────┬──────────────────────┘

│ │

└──────────────┬──────────────┘

│

▼

┌─────────────────────────────────────────────────────────────────┐

│ STEP 4: ALLOCATE UNDO SPACE │

│ • Reserve space in UNDO tablespace │

│ • Create undo record (before image) │

│ • Link transaction to undo segment │

│ • Generate unique Transaction ID │

└──────────────────────────┬──────────────────────────────────────┘

│

▼

┌─────────────────────────────────────────────────────────────────┐

│ STEP 5: ACQUIRE LOCKS │

│ • Get TX (Transaction) lock on row │

│ • Lock the table in Row Exclusive (RX) mode │

│ • Prevent conflicting DML operations │

└──────────────────────────┬──────────────────────────────────────┘

│

▼

┌─────────────────────────────────────────────────────────────────┐

│ STEP 6: DATA BLOCK SEARCH │

│ • Find appropriate data block with free space │

│ • Check high water mark (HWM) │

│ • Use freelist or ASSM (Automatic Segment Space Mgmt) │

└──────────────────────────┬──────────────────────────────────────┘

│

[Free Space Found?]

│

┌──────────────┴──────────────┐

│ │

Yes No

│ │

│ ▼

│ ┌─────────────────────────────────────┐

│ │ Allocate New Data Block │

│ │ • Extend segment if needed │

│ │ • Format new block │

│ │ • Update HWM │

│ └──────────────┬──────────────────────┘

│ │

└──────────────┬──────────────┘

│

▼

┌─────────────────────────────────────────────────────────────────┐

│ STEP 7: CHECK CONSTRAINTS │

│ • Validate NOT NULL constraints │

│ • Check CHECK constraints │

│ • Verify UNIQUE constraints │

│ • Validate FOREIGN KEY constraints │

│ • Verify PRIMARY KEY uniqueness │

└──────────────────────────┬──────────────────────────────────────┘

│

[Constraints Valid?]

│

Yes ───┼─── No → Error: ORA-00001/ORA-02291

│ Rollback changes

▼

┌─────────────────────────────────────────────────────────────────┐

│ STEP 8: GENERATE REDO RECORD │

│ • Create redo change vector │

│ • Write to Redo Log Buffer (memory) │

│ • Record: Block address, SCN, change info │

│ • Ensure recoverability │

└──────────────────────────┬──────────────────────────────────────┘

│

▼

┌─────────────────────────────────────────────────────────────────┐

│ STEP 9: INSERT INTO BUFFER CACHE │

│ • Copy data block to Database Buffer Cache │

│ • Mark block as "dirty" (modified) │

│ • Insert new row into block │

│ • Update block header (row directory) │

│ • Assign ROWID to new row │

└──────────────────────────┬──────────────────────────────────────┘

│

▼

┌─────────────────────────────────────────────────────────────────┐

│ STEP 10: UPDATE INDEXES │

│ • Insert entries in ALL indexes on table │

│ • Generate redo for index changes │

│ • Update index blocks in buffer cache │

│ • Mark index blocks as dirty │

└──────────────────────────┬──────────────────────────────────────┘

│

▼

┌─────────────────────────────────────────────────────────────────┐

│ STEP 11: FIRE TRIGGERS (if any) │

│ • Execute BEFORE INSERT triggers │

│ • Execute AFTER INSERT triggers │

│ • Triggers can modify data or reject INSERT │

└──────────────────────────┬──────────────────────────────────────┘

│

▼

┌─────────────────────────────────────────────────────────────────┐

│ STEP 12: RETURN SUCCESS │

│ • Return "1 row inserted" message │

│ • Transaction remains UNCOMMITTED │

│ • Changes visible only to current session │

│ • Locks held until COMMIT or ROLLBACK │

└──────────────────────────┬──────────────────────────────────────┘

│

▼

┌─────────────────────────────────────────────────────────────────┐

│ WAITING FOR COMMIT OR ROLLBACK │

│ Changes are in memory (Buffer Cache) - NOT on disk yet │

└─────────────────────────────────────────────────────────────────┘

│

┌──────────┴──────────┐

│ │

COMMIT ROLLBACK

│ │

▼ ▼

┌──────────────────┐ ┌──────────────────────┐

│ STEP 13: COMMIT │ │ STEP 13: ROLLBACK │

│ • Write redo to │ │ • Read undo segment │

│ disk (LGWR) │ │ • Restore old values │

│ • Release locks │ │ • Release locks │

│ • Make permanent │ │ • Discard changes │

└──────────────────┘ └──────────────────────┘Detailed Step-by-Step Breakdown

Step 1: Syntax Check

Oracle’s SQL parser first validates the syntax of your INSERT statement.

What Oracle Checks:

- Proper INSERT syntax

- Correct keyword usage (INSERT, INTO, VALUES)

- Balanced parentheses and proper comma placement

- Valid SQL structure

Examples of Syntax Errors:

-- Missing INTO keyword

INSERT employees VALUES (101, 'John');

-- ORA-00928: missing SELECT keyword

-- Missing VALUES keyword

INSERT INTO employees (101, 'John');

-- ORA-00936: missing expression

-- Unbalanced parentheses

INSERT INTO employees VALUES (101, 'John';

-- ORA-00907: missing right parenthesis

-- Correct syntax

INSERT INTO employees VALUES (101, 'John', 'Doe', 50000);

-- SuccessStep 2: Semantic Check

After syntax validation, Oracle verifies the logical correctness.

What Oracle Verifies:

- Table exists in the database

- Columns exist (if column list specified)

- User has INSERT privilege on the table

- Data types are compatible

- Column count matches value count

Examples:

-- Table doesn't exist

INSERT INTO employee_data VALUES (101, 'John');

-- ORA-00942: table or view does not exist

-- Column doesn't exist

INSERT INTO employees (emp_id, first_name, invalid_col)

VALUES (101, 'John', 'Value');

-- ORA-00904: "INVALID_COL": invalid identifier

-- Column count mismatch

INSERT INTO employees (employee_id, first_name)

VALUES (101, 'John', 'Doe');

-- ORA-00913: too many values

-- No INSERT privilege

INSERT INTO hr.salary_data VALUES (101, 50000);

-- ORA-01031: insufficient privileges

-- Correct

INSERT INTO employees (employee_id, first_name, last_name)

VALUES (101, 'John', 'Doe');

-- SuccessStep 3: Shared Pool Check (Parse Phase)

Oracle generates a hash value and searches the Library Cache.

Two Scenarios:

A) Soft Parse (Fast – Cache Hit)

-- First execution (Hard Parse)

INSERT INTO employees VALUES (101, 'John', 'Doe', 50000);

-- Second execution with same literal values (Soft Parse)

INSERT INTO employees VALUES (101, 'John', 'Doe', 50000);

-- ✓ Plan found in cache - reusedB) Hard Parse (Slow – Cache Miss)

-- Different literal values = different SQL hash

INSERT INTO employees VALUES (102, 'Jane', 'Smith', 60000);

-- ✗ New SQL hash - requires hard parseBest Practice – Use Bind Variables:

-- Without bind variables (Bad - multiple hard parses)

INSERT INTO employees VALUES (101, 'John', 'Doe', 50000);

INSERT INTO employees VALUES (102, 'Jane', 'Smith', 60000);

INSERT INTO employees VALUES (103, 'Bob', 'Johnson', 55000);

-- Result: 3 different SQL statements = 3 hard parses

-- With bind variables (Good - single hard parse)

INSERT INTO employees VALUES (:emp_id, :fname, :lname, :sal);

-- Result: 1 hard parse, reused for all executionsCheck Parse Statistics:

SET LINESIZE 150

SET PAGESIZE 50

COLUMN name FORMAT A30

COLUMN value FORMAT 999,999,999

SELECT name, value

FROM v$sysstat

WHERE name LIKE '%parse%'

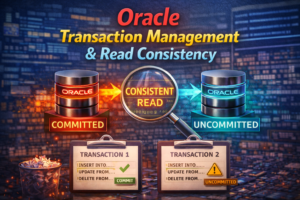

ORDER BY name;Step 4: Allocate Undo Space

Before making any changes, Oracle creates a “before image” in the undo tablespace.

Why Undo is Needed:

- ROLLBACK capability: Restore data if transaction fails

- Read Consistency: Other users see data as it was before your change

- Flashback queries: Query data as of past time

- Recovery: Rollback uncommitted transactions during crash recovery

Undo Process:

┌─────────────────────────────────────────┐

│ BEFORE INSERT EXECUTION │

│ │

│ employees Table: │

│ ┌─────┬──────┬──────┬────────┐ │

│ │ 100 │ King │ CEO │ 100000 │ │

│ └─────┴──────┴──────┴────────┘ │

└─────────────────────────────────────────┘

│

▼

┌─────────────────────────────────────────┐

│ UNDO SEGMENT CREATION │

│ │

│ Undo Record: │

│ • Transaction ID: 0x0a0b0c │

│ • SCN: 1234567 │

│ • Operation: INSERT (no before image) │

│ • Table: EMPLOYEES │

│ • Space reserved for potential ROLLBACK│

└─────────────────────────────────────────┘Monitor Undo Usage:

SET LINESIZE 180

SET PAGESIZE 100

COLUMN tablespace_name FORMAT A20

COLUMN status FORMAT A10

COLUMN size_mb FORMAT 999,999.99

COLUMN used_mb FORMAT 999,999.99

COLUMN free_mb FORMAT 999,999.99

SELECT tablespace_name,

status,

ROUND(SUM(bytes)/1024/1024, 2) size_mb,

ROUND(SUM(bytes - NVL(maxbytes,bytes))/1024/1024, 2) used_mb,

ROUND(SUM(maxbytes - bytes)/1024/1024, 2) free_mb

FROM dba_data_files

WHERE tablespace_name LIKE '%UNDO%'

GROUP BY tablespace_name, status;Check Active Transactions:

SET LINESIZE 180

COLUMN username FORMAT A15

COLUMN status FORMAT A10

COLUMN undo_seg FORMAT A15

COLUMN used_undo_mb FORMAT 999.99

SELECT s.username,

s.status,

t.used_ublk * 8192/1024/1024 used_undo_mb,

t.start_time,

s.sql_id

FROM v$transaction t

JOIN v$session s ON t.ses_addr = s.saddr

ORDER BY t.used_ublk DESC;Step 5: Acquire Locks

Oracle automatically acquires locks to prevent conflicting operations.

Lock Types During INSERT:

┌──────────────────────────────────────────────────────┐

│ LOCK HIERARCHY │

├──────────────────────────────────────────────────────┤

│ │

│ 1. TABLE LOCK (TM Lock) │

│ Mode: RX (Row Exclusive) │

│ Purpose: Prevent DDL on table │

│ Allows: Other DML operations │

│ │

│ 2. ROW LOCK (TX Lock) │

│ Mode: Exclusive on new row │

│ Purpose: Prevent duplicate inserts (if PK) │

│ Scope: Specific row being inserted │

│ │

└──────────────────────────────────────────────────────┘Lock Compatibility Matrix:

| Current Lock | Can Another Session INSERT? | Can Another Session UPDATE? | Can Another Session DELETE? | Can Another Session SELECT? |

|---|---|---|---|---|

| RX (Row Exclusive) | ✅ Yes | ✅ Yes (different rows) | ✅ Yes (different rows) | ✅ Yes |

View Active Locks:

SET LINESIZE 200

SET PAGESIZE 100

COLUMN object_name FORMAT A30

COLUMN lock_type FORMAT A15

COLUMN mode_held FORMAT A15

COLUMN username FORMAT A15

SELECT s.username,

o.object_name,

DECODE(l.type,

'TM', 'Table Lock',

'TX', 'Transaction Lock',

l.type) lock_type,

DECODE(l.lmode,

0, 'None',

1, 'Null',

2, 'Row Share',

3, 'Row Exclusive',

4, 'Share',

5, 'Share Row Exclusive',

6, 'Exclusive',

l.lmode) mode_held,

s.sid,

s.serial#

FROM v$lock l

JOIN v$session s ON l.sid = s.sid

JOIN dba_objects o ON l.id1 = o.object_id

WHERE s.username IS NOT NULL

ORDER BY o.object_name, s.username;Example – Lock Contention:

-- Session 1

INSERT INTO employees VALUES (101, 'John', 'Doe', 50000);

-- Lock acquired, transaction open (not committed)

-- Session 2 (tries to insert same primary key)

INSERT INTO employees VALUES (101, 'Jane', 'Smith', 60000);

-- Waits for Session 1 to COMMIT or ROLLBACK

-- If Session 1 commits: ORA-00001 (unique constraint violated)

-- If Session 1 rollbacks: Insert succeedsStep 6: Data Block Search and Space Allocation

Oracle searches for a data block with sufficient free space.

Free Space Management Methods:

A) Manual Segment Space Management (MSSM) – Legacy

┌─────────────────────────────────────┐

│ FREELIST METHOD │

├─────────────────────────────────────┤

│ │

│ 1. Check segment header │

│ 2. Traverse freelist │

│ 3. Find block with PCTFREE space │

│ 4. Allocate row in block │

│ │

└─────────────────────────────────────┘B) Automatic Segment Space Management (ASSM) – Modern

┌─────────────────────────────────────┐

│ ASSM METHOD (Bitmap) │

├─────────────────────────────────────┤

│ │

│ 1. Check bitmap blocks │

│ 2. Find block with adequate space │

│ 3. Use level 1-3 bitmaps │

│ 4. More efficient, less contention │

│ │

└─────────────────────────────────────┘High Water Mark (HWM) Concept:

┌────────────────────────────────────────────────────────┐

│ TABLE SEGMENT │

├────────────────────────────────────────────────────────┤

│ │

│ ┌──────┬──────┬──────┬──────┬──────┬──────┬──────┐ │

│ │Block1│Block2│Block3│Block4│ │ │ │ │

│ │ Used │ Used │ Used │ Used │ Free │ Free │ Free │ │

│ └──────┴──────┴──────┴──────┴──────┴──────┴──────┘ │

│ ↑ │

│ High Water Mark │

│ │

│ • Full table scans read up to HWM │

│ • New INSERTs may use blocks below HWM if space exists│

│ • HWM moves forward, never backward │

│ │

└────────────────────────────────────────────────────────┘Check Table Block Usage:

SET LINESIZE 180

SET PAGESIZE 100

COLUMN owner FORMAT A15

COLUMN table_name FORMAT A30

COLUMN blocks FORMAT 999,999

COLUMN empty_blocks FORMAT 999,999

COLUMN avg_row_len FORMAT 9,999

SELECT owner,

table_name,

blocks,

empty_blocks,

num_rows,

avg_row_len,

ROUND((blocks * 8192)/1024/1024, 2) size_mb

FROM dba_tables

WHERE owner = 'HR'

AND table_name = 'EMPLOYEES';Analyze Block Space Usage:

-- Gather detailed segment statistics

EXEC DBMS_SPACE.SPACE_USAGE('HR','EMPLOYEES','TABLE', -

null, null);

-- View block allocation

SET LINESIZE 150

COLUMN segment_name FORMAT A30

COLUMN blocks FORMAT 999,999

COLUMN extents FORMAT 9,999

SELECT segment_name,

segment_type,

tablespace_name,

blocks,

extents,

ROUND(bytes/1024/1024, 2) size_mb

FROM dba_segments

WHERE owner = 'HR'

AND segment_name = 'EMPLOYEES';Step 7: Constraint Validation

Oracle validates all constraints defined on the table.

Constraint Types Checked:

┌────────────────────────────────────────────────────┐

│ CONSTRAINT VALIDATION ORDER │

├────────────────────────────────────────────────────┤

│ │

│ 1. NOT NULL Constraints │

│ └─ Check mandatory columns have values │

│ │

│ 2. CHECK Constraints │

│ └─ Validate business rules │

│ │

│ 3. UNIQUE Constraints │

│ └─ Ensure no duplicate values │

│ │

│ 4. PRIMARY KEY Constraints │

│ └─ Combination of NOT NULL + UNIQUE │

│ │

│ 5. FOREIGN KEY Constraints │

│ └─ Verify parent record exists │

│ │

└────────────────────────────────────────────────────┘Constraint Examples:

-- Create table with constraints

CREATE TABLE employees (

employee_id NUMBER(6) PRIMARY KEY,

first_name VARCHAR2(20) NOT NULL,

last_name VARCHAR2(25) NOT NULL,

email VARCHAR2(50) UNIQUE,

salary NUMBER(8,2) CHECK (salary > 0),

department_id NUMBER(4),

hire_date DATE DEFAULT SYSDATE,

CONSTRAINT emp_dept_fk

FOREIGN KEY (department_id)

REFERENCES departments(department_id)

);

-- NOT NULL violation

INSERT INTO employees (employee_id, last_name)

VALUES (101, 'Doe');

-- ORA-01400: cannot insert NULL into ("HR"."EMPLOYEES"."FIRST_NAME")

-- CHECK constraint violation

INSERT INTO employees (employee_id, first_name, last_name, salary)

VALUES (101, 'John', 'Doe', -5000);

-- ORA-02290: check constraint (HR.SYS_C007123) violated

-- UNIQUE constraint violation

INSERT INTO employees (employee_id, first_name, last_name, email)

VALUES (101, 'John', 'Doe', 'john@company.com');

INSERT INTO employees (employee_id, first_name, last_name, email)

VALUES (102, 'Jane', 'Smith', 'john@company.com');

-- ORA-00001: unique constraint (HR.SYS_C007124) violated

-- PRIMARY KEY violation

INSERT INTO employees (employee_id, first_name, last_name)

VALUES (101, 'John', 'Doe');

INSERT INTO employees (employee_id, first_name, last_name)

VALUES (101, 'Jane', 'Smith');

-- ORA-00001: unique constraint (HR.SYS_C007125) violated

-- FOREIGN KEY violation

INSERT INTO employees (employee_id, first_name, last_name, department_id)

VALUES (101, 'John', 'Doe', 999);

-- ORA-02291: integrity constraint (HR.EMP_DEPT_FK) violated - parent key not found

-- Correct INSERT

INSERT INTO employees (employee_id, first_name, last_name, email, salary, department_id)

VALUES (101, 'John', 'Doe', 'john@company.com', 50000, 10);

-- 1 row insertedView Table Constraints:

SET LINESIZE 180

SET PAGESIZE 100

COLUMN constraint_name FORMAT A30

COLUMN constraint_type FORMAT A10

COLUMN search_condition FORMAT A40

COLUMN r_constraint_name FORMAT A30

SELECT constraint_name,

DECODE(constraint_type,

'P', 'PRIMARY',

'U', 'UNIQUE',

'R', 'FOREIGN',

'C', 'CHECK',

constraint_type) constraint_type,

search_condition,

r_constraint_name,

status

FROM dba_constraints

WHERE owner = 'HR'

AND table_name = 'EMPLOYEES'

ORDER BY constraint_type;Step 8: Generate Redo Record

Oracle generates redo entries for crash recovery and replication.

Redo Record Components:

┌──────────────────────────────────────────────────────┐

│ REDO RECORD STRUCTURE │

├──────────────────────────────────────────────────────┤

│ │

│ 1. Change Vector │

│ • Operation type: INSERT │

│ • Object identifier (table) │

│ • Data block address (DBA) │

│ │

│ 2. SCN (System Change Number) │

│ • Unique identifier for change │

│ • Used for recovery ordering │

│ │

│ 3. Data Changes │

│ • New row data │

│ • Block header changes │

│ • Index changes │

│ │

│ 4. Transaction Information │

│ • Transaction ID │

│ • Undo segment information │

│ │

└──────────────────────────────────────────────────────┘Redo Generation Flow:

INSERT Statement

│

▼

┌──────────────────┐

│ Generate Redo │

│ Change Vector │

└────────┬─────────┘

│

▼

┌──────────────────┐

│ Write to Redo │

│ Log Buffer │

│ (Memory - SGA) │

└────────┬─────────┘

│

▼

┌──────────────────┐ COMMIT or

│ Wait for LGWR │◄─── Log Buffer Full or

│ (Log Writer) │ 3 Seconds Timeout

└────────┬─────────┘

│

▼

┌──────────────────┐

│ Write to Redo │

│ Log Files │

│ (Disk) │

└──────────────────┘Monitor Redo Generation:

SET LINESIZE 150

SET PAGESIZE 50

COLUMN name FORMAT A30

COLUMN value FORMAT 999,999,999,999

-- Check redo size generated

SELECT name, value

FROM v$sysstat

WHERE name IN ('redo size',

'redo writes',

'redo blocks written',

'redo write time');

-- Check redo log buffer usage

SELECT name, value

FROM v$sysstat

WHERE name LIKE '%redo%buffer%';View Redo Log Files:

SET LINESIZE 180

COLUMN member FORMAT A60

COLUMN status FORMAT A10

SELECT l.group#,

l.thread#,

l.sequence#,

l.bytes/1024/1024 size_mb,

l.status,

lf.member

FROM v$log l

JOIN v$logfile lf ON l.group# = lf.group#

ORDER BY l.group#;Check Current Redo Log:

SELECT group#,

sequence#,

bytes/1024/1024 size_mb,

members,

status

FROM v$log

WHERE status = 'CURRENT';Step 9: Insert Data into Buffer Cache

The actual row insertion happens in memory (Database Buffer Cache).

Buffer Cache Process:

┌────────────────────────────────────────────────────────┐

│ DATABASE BUFFER CACHE │

├────────────────────────────────────────────────────────┤

│ │

│ BEFORE INSERT: │

│ ┌─────────────────────────────────────┐ │

│ │ Data Block 1234 │ │

│ │ ┌─────────────────────────────────┐ │ │

│ │ │ Row 1: Emp 100, King, CEO │ │ │

│ │ │ Row 2: Emp 99, Smith, Manager │ │ │

│ │ │ Free Space: 4000 bytes │ │ │

│ │ └─────────────────────────────────┘ │ │

│ └─────────────────────────────────────┘ │

│ │

│ AFTER INSERT: │

│ ┌─────────────────────────────────────┐ │

│ │ Data Block 1234 (DIRTY) │ │

│ │ ┌─────────────────────────────────┐ │ │

│ │ │ Row 1: Emp 100, King, CEO │ │ │

│ │ │ Row 2: Emp 99, Smith, Manager │ │ │

│ │ │ Row 3: Emp 101, Doe, Analyst │ │ ← NEW ROW │

│ │ │ Free Space: 3920 bytes │ │ │

│ │ └─────────────────────────────────┘ │ │

│ │ Status: DIRTY (modified) │ │

│ │ SCN: 1234567 │ │

│ └─────────────────────────────────────┘ │

│ │

└────────────────────────────────────────────────────────┘Row Insertion Details:

- 1. Block Header Update: Row directory entry added

- 2. ROWID Assignment: Unique identifier for the row

- 3. Row Data Storage: Actual column values stored

- 4. Block SCN Update: System Change Number updated

- 5. Dirty Flag Set: Block marked for future write to disk

ROWID Structure:

ROWID Format: OOOOOOFFFBBBBBBRRR

Example: AAAHYtAAEAAAACXAAA

Where:

OOOOOO = Data Object Number (6 chars)

FFF = Relative File Number (3 chars)

BBBBBB = Block Number (6 chars)

RRR = Row Number (3 chars)View Buffer Cache Statistics:

SET LINESIZE 180

SET PAGESIZE 100

COLUMN name FORMAT A30

COLUMN value FORMAT 999,999,999,999

SELECT name, value

FROM v$sysstat

WHERE name IN ('db block gets',

'consistent gets',

'physical reads',

'physical writes',

'db block changes');

-- Calculate buffer cache hit ratio

SELECT ROUND((1 - (phy.value / (bg.value + cg.value))) * 100, 2) || '%'

AS "Buffer Cache Hit Ratio"

FROM v$sysstat phy,

v$sysstat bg,

v$sysstat cg

WHERE phy.name = 'physical reads'

AND bg.name = 'db block gets'

AND cg.name = 'consistent gets';View Dirty Blocks:

SELECT COUNT(*) dirty_blocks

FROM v$bh

WHERE status = 'xcur' -- Exclusive current (dirty)

AND dirty = 'Y';Step 10: Update Indexes

All indexes on the table must be updated with the new row entry.

Index Update Process:

Table: EMPLOYEES

├─ Primary Key Index: EMP_PK (employee_id)

├─ Unique Index: EMP_EMAIL_UK (email)

└─ Non-Unique Index: EMP_DEPT_IDX (department_id)

INSERT INTO employees VALUES (101, 'John', 'Doe', 'john@co.com', 50000, 10);

Index Updates Required:

┌──────────────────────────────────────┐

│ 1. EMP_PK Index │

│ Insert: 101 → ROWID(Block,Row) │

│ Check uniqueness │

│ │

│ 2. EMP_EMAIL_UK Index │

│ Insert: 'john@co.com' → ROWID │

│ Check uniqueness │

│ │

│ 3. EMP_DEPT_IDX Index │

│ Insert: 10 → ROWID │

│ (no uniqueness check) │

└──────────────────────────────────────┘Impact of Multiple Indexes:

-- Table with many indexes (example)

CREATE TABLE test_table (

id NUMBER PRIMARY KEY,

col1 VARCHAR2(100),

col2 VARCHAR2(100),

col3 DATE,

col4 NUMBER

);

CREATE INDEX idx1 ON test_table(col1);

CREATE INDEX idx2 ON test_table(col2);

CREATE INDEX idx3 ON test_table(col3);

CREATE INDEX idx4 ON test_table(col4);

CREATE INDEX idx5 ON test_table(col1, col2);

-- Single INSERT must update:

-- 1 Primary Key Index + 5 Secondary Indexes = 6 index updates!

-- More indexes = Slower INSERT performanceMonitor Index Usage and Cost:

SET LINESIZE 180

SET PAGESIZE 100

COLUMN table_name FORMAT A20

COLUMN index_name FORMAT A30

COLUMN uniqueness FORMAT A10

COLUMN status FORMAT A10

SELECT table_name,

index_name,

uniqueness,

status,

num_rows,

distinct_keys,

leaf_blocks,

blevel

FROM dba_indexes

WHERE owner = 'HR'

AND table_name = 'EMPLOYEES'

ORDER BY index_name;Check Index Fragmentation:

ANALYZE INDEX hr.emp_pk VALIDATE STRUCTURE;

SELECT name,

height,

lf_rows,

del_lf_rows,

ROUND((del_lf_rows / NULLIF(lf_rows,0)) * 100, 2) pct_deleted

FROM index_stats;Tip: For bulk inserts, consider:

- Dropping indexes before insert

- Rebuilding indexes after insert

- Using `APPEND` hint to bypass index maintenance temporarily

Step 11: Fire Triggers (If Defined)

If triggers are defined on the table, they execute at specific points.

Trigger Types and Timing:

┌────────────────────────────────────────────────────────┐

│ TRIGGER EXECUTION ORDER │

├────────────────────────────────────────────────────────┤

│ │

│ 1. BEFORE STATEMENT Trigger │

│ └─ Executes once before INSERT │

│ │

│ 2. BEFORE ROW Trigger │

│ └─ Executes for each row being inserted │

│ └─ Can modify :NEW values │

│ │

│ 3. ACTUAL INSERT OPERATION │

│ └─ Row inserted into table │

│ │

│ 4. AFTER ROW Trigger │

│ └─ Executes for each row inserted │

│ └─ Cannot modify :NEW values │

│ │

│ 5. AFTER STATEMENT Trigger │

│ └─ Executes once after all rows inserted │

│ │

└────────────────────────────────────────────────────────┘Trigger Examples:

-- Auto-generate primary key

CREATE OR REPLACE TRIGGER emp_bir

BEFORE INSERT ON employees

FOR EACH ROW

BEGIN

IF :NEW.employee_id IS NULL THEN

SELECT emp_seq.NEXTVAL INTO :NEW.employee_id FROM dual;

END IF;

END;

/

-- Audit trigger

CREATE OR REPLACE TRIGGER emp_air

AFTER INSERT ON employees

FOR EACH ROW

BEGIN

INSERT INTO audit_log (

table_name,

operation,

username,

timestamp,

new_values

) VALUES (

'EMPLOYEES',

'INSERT',

USER,

SYSDATE,

'EmpID: ' || :NEW.employee_id

);

END;

/

-- Validation trigger

CREATE OR REPLACE TRIGGER emp_salary_check

BEFORE INSERT ON employees

FOR EACH ROW

BEGIN

IF :NEW.salary < 1000 THEN

RAISE_APPLICATION_ERROR(-20001,

'Salary must be at least 1000');

END IF;

END;

/

-- Test the triggers

INSERT INTO employees (first_name, last_name, salary, department_id)

VALUES ('John', 'Doe', 50000, 10);

-- Trigger emp_bir: Generates employee_id automatically

-- INSERT operation: Inserts the row

-- Trigger emp_air: Logs to audit_log table

-- Success: 1 row insertedView Triggers on Table:

SET LINESIZE 200

SET PAGESIZE 100

COLUMN trigger_name FORMAT A30

COLUMN triggering_event FORMAT A20

COLUMN status FORMAT A10

SELECT trigger_name,

trigger_type,

triggering_event,

status,

SUBSTR(trigger_body, 1, 50) || '...' body_preview

FROM dba_triggers

WHERE owner = 'HR'

AND table_name = 'EMPLOYEES'

ORDER BY trigger_name;Disable/Enable Triggers:

sql

-- Disable specific trigger

ALTER TRIGGER hr.emp_bir DISABLE;

-- Disable all triggers on table

ALTER TABLE hr.employees DISABLE ALL TRIGGERS;

-- Enable trigger

ALTER TRIGGER hr.emp_bir ENABLE;

-- Enable all triggers

ALTER TABLE hr.employees ENABLE ALL TRIGGERS;Performance Tip: Triggers add overhead to INSERT operations. For bulk inserts:

- Consider disabling triggers temporarily

- Re-enable after bulk load completes

- Manually run validation/audit logic if needed

Step 12: Return Success Message

After all operations complete successfully, Oracle returns a success message.

Transaction State at This Point:

┌────────────────────────────────────────────────────────┐

│ TRANSACTION STATE: UNCOMMITTED │

├────────────────────────────────────────────────────────┤

│ │

│ Changes Made (in memory): │

│ ✓ Row inserted in Buffer Cache │

│ ✓ Redo records in Redo Log Buffer │

│ ✓ Undo records created │

│ ✓ Indexes updated in Buffer Cache │

│ ✓ Locks acquired and held │

│ │

│ What's on Disk: │

│ ✗ Table data NOT written to datafile yet │

│ ✗ Index data NOT written to datafile yet │

│ ✓ Redo MAY be written (if buffer flushed) │

│ │

│ Visibility: │

│ ✓ Current session can see changes │

│ ✗ Other sessions CANNOT see changes │

│ ✗ Changes NOT permanent yet │

│ │

│ Next Steps Required: │

│ → COMMIT to make changes permanent │

│ → ROLLBACK to discard changes │

│ │

└────────────────────────────────────────────────────────┘

Test Transaction Isolation:-- Session 1

INSERT INTO employees (employee_id, first_name, last_name, salary)

VALUES (101, 'John', 'Doe', 50000);

-- 1 row inserted (not committed)

SELECT employee_id, first_name FROM employees WHERE employee_id = 101;

-- Result: 101, John (visible to Session 1)

-- Session 2 (different user or connection)

SELECT employee_id, first_name FROM employees WHERE employee_id = 101;

-- Result: No rows found (not visible to Session 2)

-- Session 1

COMMIT;

-- Commit complete

-- Session 2

SELECT employee_id, first_name FROM employees WHERE employee_id = 101;

-- Result: 101, John (now visible to all sessions)Check Uncommitted Transactions:

sql

SET LINESIZE 180

COLUMN username FORMAT A15

COLUMN status FORMAT A10

COLUMN sql_text FORMAT A60

SELECT s.sid,

s.serial#,

s.username,

s.status,

t.start_time,

t.used_ublk,

sq.sql_text

FROM v$transaction t

JOIN v$session s ON t.ses_addr = s.saddr

LEFT JOIN v$sql sq ON s.sql_id = sq.sql_id

WHERE s.username IS NOT NULL;Step 13A: COMMIT Process

When you issue COMMIT, Oracle makes all changes permanent.

COMMIT Execution Flow:

USER ISSUES: COMMIT;

│

▼

┌──────────────────────────────────────┐

│ 1. Generate Commit SCN │

│ • Unique system change number │

│ • Marks point of consistency │

└────────────┬─────────────────────────┘

│

▼

┌──────────────────────────────────────┐

│ 2. Write Redo to Disk (LGWR) │

│ • Force redo log buffer flush │

│ • Write commit record to redo │

│ • Wait for write completion │

└────────────┬─────────────────────────┘

│

▼

┌──────────────────────────────────────┐

│ 3. Release Locks │

│ • Release TX (row) locks │

│ • Release TM (table) locks │

│ • Other sessions can proceed │

└────────────┬─────────────────────────┘

│

▼

┌──────────────────────────────────────┐

│ 4. Mark Transaction Complete │

│ • Update transaction table │

│ • Clear transaction ID │

│ • Return "Commit complete" │

└────────────┬─────────────────────────┘

│

▼

┌──────────────────────────────────────┐

│ 5. Background Process (DBWR) │

│ • Eventually writes dirty blocks │

│ • Writes to datafiles │

│ • Done asynchronously │

└──────────────────────────────────────┘Important: COMMIT does NOT immediately write data blocks to disk. It only guarantees redo is written, which is sufficient for recovery.

Monitor COMMIT Performance:

SET LINESIZE 150

COLUMN name FORMAT A40

COLUMN value FORMAT 999,999,999

SELECT name, value

FROM v$sysstat

WHERE name IN ('user commits',

'transaction rollbacks',

'redo writes',

'redo write time'

);Step 13B: ROLLBACK Process

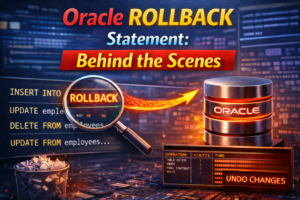

If you issue ROLLBACK, Oracle discards all changes.

ROLLBACK Execution Flow:

USER ISSUES: ROLLBACK;

│

▼

┌──────────────────────────────────────┐

│ 1. Read Undo Segment │

│ • Locate undo records │

│ • Read "before image" data │

└────────────┬─────────────────────────┘

│

▼

┌──────────────────────────────────────┐

│ 2. Restore Original Values │

│ • Apply undo to buffer cache │

│ • Remove inserted row │

│ • Restore block to previous state│

└────────────┬─────────────────────────┘

│

▼

┌──────────────────────────────────────┐

│ 3. Generate Redo for Rollback │

│ • Yes, rollback is logged! │

│ • Ensures recoverability │

└────────────┬─────────────────────────┘

│

▼

┌──────────────────────────────────────┐

│ 4. Release Locks │

│ • Release all locks │

│ • Other sessions can proceed │

└────────────┬─────────────────────────┘

│

▼

┌──────────────────────────────────────┐

│ 5. Mark Transaction Aborted │

│ • Clear transaction ID │

│ • Free undo space │

│ • Return "Rollback complete" │

└──────────────────────────────────────┘Example:

-- Start transaction

INSERT INTO employees (employee_id, first_name, last_name, salary)

VALUES (101, 'John', 'Doe', 50000);

-- 1 row inserted

-- Check the data

SELECT employee_id, first_name FROM employees WHERE employee_id = 101;

-- Result: 101, John

-- Decide to cancel

ROLLBACK;

-- Rollback complete

-- Verify data is gone

SELECT employee_id, first_name FROM employees WHERE employee_id = 101;

-- Result: No rows foundPerformance Optimization for INSERT Operations

1. Use Bulk INSERT Operations

Single Row INSERT (Slow):

BEGIN

FOR i IN 1..10000 LOOP

INSERT INTO employees VALUES (i, 'Name'||i, 'Last'||i, 50000, 10);

END LOOP;

COMMIT;

END;

/

-- Very slow: 10,000 individual INSERT operationsBulk INSERT with FORALL (Fast):

DECLARE

TYPE t_emp_id IS TABLE OF NUMBER INDEX BY PLS_INTEGER;

TYPE t_fname IS TABLE OF VARCHAR2(20) INDEX BY PLS_INTEGER;

l_emp_ids t_emp_id;

l_fnames t_fname;

BEGIN

FOR i IN 1..10000 LOOP

l_emp_ids(i) := i;

l_fnames(i) := 'Name' || i;

END LOOP;

FORALL i IN 1..10000

INSERT INTO employees (employee_id, first_name, last_name, salary, department_id)

VALUES (l_emp_ids(i), l_fnames(i), 'Last'||i, 50000, 10);

COMMIT;

END;

/

-- Much faster: Bulk operation with single context switchDirect Path INSERT (Fastest):

INSERT /*+ APPEND */ INTO employees

SELECT employee_id, first_name, last_name, salary, department_id

FROM temp_employees;

COMMIT;Benefits:

- Writes directly above HWM

- Minimal redo generation

- No index maintenance during insert (indexes invalidated)

- Requires exclusive lock on table

2. Disable Constraints and Triggers for Bulk Loads

-- Disable constraints

ALTER TABLE employees DISABLE CONSTRAINT emp_dept_fk;

ALTER TABLE employees DISABLE CONSTRAINT emp_email_uk;

-- Disable triggers

ALTER TABLE employees DISABLE ALL TRIGGERS;

-- Perform bulk insert

INSERT /*+ APPEND */ INTO employees

SELECT * FROM external_data;

COMMIT;

-- Re-enable constraints (validates data)

ALTER TABLE employees ENABLE CONSTRAINT emp_dept_fk;

ALTER TABLE employees ENABLE CONSTRAINT emp_email_uk;

-- Re-enable triggers

ALTER TABLE employees ENABLE ALL TRIGGERS;3. Use NOLOGGING for Large Inserts

-- Set table to NOLOGGING

ALTER TABLE employees NOLOGGING;

-- Perform insert with minimal redo

INSERT /*+ APPEND */ INTO employees

SELECT * FROM staging_table;

COMMIT;

-- Set back to LOGGING

ALTER TABLE employees LOGGING;

-- Note: Take backup after bulk load

-- NOLOGGING operations are not recoverable4. Optimize Index Strategy

-- For large bulk inserts:

-- Step 1: Drop indexes

DROP INDEX emp_dept_idx;

DROP INDEX emp_salary_idx;

-- Step 2: Perform bulk insert

INSERT /*+ APPEND */ INTO employees

SELECT * FROM source_data;

COMMIT;

-- Step 3: Rebuild indexes (faster than maintaining during insert)

CREATE INDEX emp_dept_idx ON employees(department_id) NOLOGGING;

CREATE INDEX emp_salary_idx ON employees(salary) NOLOGGING;

-- Step 4: Gather statistics

EXEC DBMS_STATS.GATHER_TABLE_STATS('HR', 'EMPLOYEES', CASCADE => TRUE);5. Use Partitioning for Very Large Tables

-- Create partitioned table

CREATE TABLE employees (

employee_id NUMBER,

first_name VARCHAR2(20),

hire_date DATE,

department_id NUMBER

)

PARTITION BY RANGE (hire_date) (

PARTITION emp_2023 VALUES LESS THAN (TO_DATE('2024-01-01', 'YYYY-MM-DD')),

PARTITION emp_2024 VALUES LESS THAN (TO_DATE('2025-01-01', 'YYYY-MM-DD')),

PARTITION emp_2025 VALUES LESS THAN (TO_DATE('2026-01-01', 'YYYY-MM-DD'))

);

-- Insert into specific partition (faster)

INSERT INTO employees PARTITION (emp_2025)

VALUES (101, 'John', TO_DATE('2025-06-15', 'YYYY-MM-DD'), 10);6. Monitor and Tune INSERT Performance

Track INSERT Statistics:

SET LINESIZE 200

COLUMN sql_text FORMAT A60

COLUMN executions FORMAT 999,999

COLUMN rows_processed FORMAT 999,999,999

COLUMN elapsed_sec FORMAT 999,990.99

SELECT sql_id,

sql_text,

executions,

rows_processed,

ROUND(elapsed_time/1000000, 2) elapsed_sec,

ROUND(cpu_time/1000000, 2) cpu_sec,

buffer_gets,

disk_reads,

ROUND(rows_processed/NULLIF(elapsed_time/1000000,0), 2) rows_per_sec

FROM v$sql

WHERE UPPER(sql_text) LIKE '%INSERT INTO%'

AND sql_text NOT LIKE '%v$sql%'

AND executions > 0

ORDER BY elapsed_time DESC

FETCH FIRST 10 ROWS ONLY;Check Wait Events:

SET LINESIZE 180

COLUMN event FORMAT A40

COLUMN total_waits FORMAT 999,999,999

COLUMN time_waited_sec FORMAT 999,999.99

SELECT event,

total_waits,

ROUND(time_waited/100, 2) time_waited_sec,

ROUND(average_wait/100, 4) avg_wait_sec

FROM v$system_event

WHERE event LIKE '%log%'

OR event LIKE '%write%'

OR event LIKE '%lock%'

ORDER BY time_waited DESC

FETCH FIRST 15 ROWS ONLY;Common INSERT Errors and Solutions

Error: ORA-00001 (Unique Constraint Violated)

-- Problem

INSERT INTO employees (employee_id, first_name, last_name)

VALUES (100, 'John', 'Doe');

-- ORA-00001: unique constraint (HR.EMP_PK) violated

-- Solution 1: Check existing data

SELECT employee_id FROM employees WHERE employee_id = 100;

-- Solution 2: Use different value

INSERT INTO employees (employee_id, first_name, last_name)

VALUES (101, 'John', 'Doe');

-- Solution 3: Use sequence

INSERT INTO employees (employee_id, first_name, last_name)

VALUES (emp_seq.NEXTVAL, 'John', 'Doe');Error: ORA-01400 (Cannot Insert NULL)

-- Problem

INSERT INTO employees (employee_id, last_name)

VALUES (101, 'Doe');

-- ORA-01400: cannot insert NULL into ("HR"."EMPLOYEES"."FIRST_NAME")

-- Solution: Provide value for NOT NULL column

INSERT INTO employees (employee_id, first_name, last_name)

VALUES (101, 'John', 'Doe');Error: ORA-02291 (Integrity Constraint Violated – Parent Key Not Found)

-- Problem

INSERT INTO employees (employee_id, first_name, last_name, department_id)

VALUES (101, 'John', 'Doe', 999);

-- ORA-02291: integrity constraint (HR.EMP_DEPT_FK) violated

-- Solution 1: Check parent table

SELECT department_id FROM departments WHERE department_id = 999;

-- No rows found

-- Solution 2: Use valid foreign key

SELECT department_id FROM departments;

-- Result: 10, 20, 30, 40

INSERT INTO employees (employee_id, first_name, last_name, department_id)

VALUES (101, 'John', 'Doe', 10);

-- SuccessError: ORA-01654 (Unable to Extend Index)

-- Problem: Index tablespace full

INSERT INTO employees VALUES (...);

-- ORA-01654: unable to extend index HR.EMP_PK

-- Solution 1: Add datafile to tablespace

ALTER TABLESPACE users ADD DATAFILE

'/u01/app/oracle/oradata/orcl/users02.dbf' SIZE 100M;

-- Solution 2: Resize existing datafile

ALTER DATABASE DATAFILE '/u01/app/oracle/oradata/orcl/users01.dbf'

RESIZE 500M;

-- Solution 3: Enable autoextend

ALTER DATABASE DATAFILE '/u01/app/oracle/oradata/orcl/users01.dbf'

AUTOEXTEND ON NEXT 10M MAXSIZE UNLIMITED;Error: ORA-30036 (Unable to Extend Undo Segment)

-- Problem: Undo tablespace full

INSERT INTO large_table SELECT * FROM source_table;

-- ORA-30036: unable to extend segment by 8 in undo tablespace 'UNDOTBS1'

-- Solution 1: Add datafile to undo tablespace

ALTER TABLESPACE undotbs1 ADD DATAFILE

'/u01/app/oracle/oradata/orcl/undotbs02.dbf' SIZE 1G;

-- Solution 2: Increase undo retention (if needed)

ALTER SYSTEM SET undo_retention = 900; -- 15 minutes

-- Solution 3: Commit more frequently for large operations

BEGIN

FOR i IN 1..1000000 LOOP

INSERT INTO large_table VALUES (...);

IF MOD(i, 10000) = 0 THEN

COMMIT; -- Commit every 10,000 rows

END IF;

END LOOP;

COMMIT;

END;Best Practices for INSERT Operations

1. Always Use Bind Variables

-- Bad (hard parse for each execution)

INSERT INTO employees VALUES (101, 'John', 'Doe', 50000);

INSERT INTO employees VALUES (102, 'Jane', 'Smith', 60000);

-- Good (single hard parse, reused plan)

INSERT INTO employees VALUES (:emp_id, :fname, :lname, :sal);2. Commit at Appropriate Intervals

-- For OLTP: Commit after each business transaction

INSERT INTO orders VALUES (...);

INSERT INTO order_items VALUES (...);

COMMIT; -- Small, frequent commits

-- For Batch Loads: Commit in batches

BEGIN

FOR i IN 1..1000000 LOOP

INSERT INTO staging VALUES (...);

IF MOD(i, 50000) = 0 THEN

COMMIT; -- Commit every 50,000 rows

END IF;

END LOOP;

COMMIT;

END;3. Use Appropriate INSERT Method

-- Single row: Regular INSERT

INSERT INTO employees VALUES (101, 'John', 'Doe', 50000);

-- Multiple rows from SELECT: INSERT...SELECT

INSERT INTO employees_archive

SELECT * FROM employees WHERE hire_date < '2020-01-01';

-- Bulk load: Direct path with APPEND

INSERT /*+ APPEND */ INTO employees

SELECT * FROM external_table;4. Monitor Resource Usage

-- Create monitoring script

SET LINESIZE 180

SET PAGESIZE 100

PROMPT ========================================

PROMPT INSERT OPERATION MONITORING

PROMPT ========================================

PROMPT Undo Usage:

SELECT tablespace_name,

ROUND(SUM(bytes)/1024/1024, 2) used_mb

FROM dba_undo_extents

WHERE status = 'ACTIVE'

GROUP BY tablespace_name;

PROMPT

PROMPT Active Transactions:

SELECT s.username, t.used_ublk, t.start_time

FROM v$transaction t

JOIN v$session s ON t.ses_addr = s.saddr;

PROMPT

PROMPT Locked Objects:

SELECT object_name, locked_mode

FROM v$locked_object lo

JOIN dba_objects o ON lo.object_id = o.object_id;5. Handle Errors Gracefully

-- Use exception handling in PL/SQL

BEGIN

INSERT INTO employees (employee_id, first_name, last_name, salary)

VALUES (101, 'John', 'Doe', 50000);

COMMIT;

EXCEPTION

WHEN DUP_VAL_ON_INDEX THEN

DBMS_OUTPUT.PUT_LINE('Error: Duplicate employee ID');

ROLLBACK;

WHEN OTHERS THEN

DBMS_OUTPUT.PUT_LINE('Error: ' || SQLERRM);

ROLLBACK;

END;

/Quick Reference Card

INSERT Syntax Variations

-- Basic INSERT

INSERT INTO table_name VALUES (val1, val2, val3);

-- INSERT with column list

INSERT INTO table_name (col1, col2) VALUES (val1, val2);

-- INSERT multiple rows (Oracle 23c+)

INSERT INTO employees VALUES

(101, 'John', 'Doe'),

(102, 'Jane', 'Smith'),

(103, 'Bob', 'Johnson');

-- INSERT from SELECT

INSERT INTO target_table

SELECT * FROM source_table WHERE condition;

-- INSERT with subquery

INSERT INTO employees (employee_id, first_name, department_name)

SELECT emp_seq.NEXTVAL, first_name,

(SELECT department_name FROM departments WHERE department_id = 10)

FROM temp_employees;

-- INSERT with DEFAULT values

INSERT INTO employees (employee_id, first_name, hire_date)

VALUES (101, 'John', DEFAULT); -- Uses column default

-- INSERT with RETURNING clause

INSERT INTO employees (employee_id, first_name, last_name)

VALUES (emp_seq.NEXTVAL, 'John', 'Doe')

RETURNING employee_id INTO :new_id;Conclusion

Understanding the internal execution of an INSERT statement is crucial for effective Oracle database management and performance tuning. From the initial syntax check through constraint validation, redo generation, and final commit or rollback, each step plays a vital role in maintaining data integrity and consistency.

Key Takeaways:

- INSERT is not immediate – Changes are in memory until COMMIT

- Undo segments are crucial – Enable ROLLBACK capability and read consistency

- Redo logs ensure recoverability – Even if data blocks aren’t written to disk yet

- Indexes add overhead – Each index must be updated during INSERT

- Constraints are validated – Before the row is physically inserted

- Locks are automatically acquired – Released only at COMMIT or ROLLBACK

- Bulk operations are faster – Use FORALL, APPEND hint, or direct path loads

- Transaction control matters – Commit at appropriate intervals

By mastering these concepts and using the monitoring scripts provided, you’ll be well-prepared for database administration interviews and equipped to optimize INSERT performance in production environments.

Remember: A well-understood INSERT process is the foundation of efficient data manipulation and transaction management!